OVERLOAD

The workload of each exercise session must exceed the normal demands placed on the body in order to bring about a training effect.

THE COMPONENTS OF PHYSICAL FITNESS ARE:

- Cardiorespiratory (CR) endurance – the efficiency with which the body delivers oxygen and nutrients needed for muscular activity and transports waste products from the cells.

- Muscular strength – the greatest amount of force a muscle or muscle group can exert in a single effort.

- Muscular endurance – the ability of a muscle or muscle group to perform repeated movements with a sub-maximal force for extended periods of time.

- Flexibility – the ability to move the joints or any group of joints through an entire, normal range of motion.

- Body composition – the percentage of body fat a person has in comparison to his or her total body mass.
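As a rough illustration of the body composition definition above, body fat percentage is fat mass divided by total body mass, times 100. The sketch below assumes both masses are already known; the function name and the sample figures are hypothetical and chosen only for illustration.

```python
def body_fat_percentage(fat_mass_kg: float, total_mass_kg: float) -> float:
    """Return body fat as a percentage of total body mass."""
    if total_mass_kg <= 0:
        raise ValueError("total body mass must be positive")
    if not 0 <= fat_mass_kg <= total_mass_kg:
        raise ValueError("fat mass must be between 0 and total body mass")
    return 100.0 * fat_mass_kg / total_mass_kg


# Hypothetical example: 16 kg of fat on an 80 kg body
print(body_fat_percentage(16.0, 80.0))  # 20.0
```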

PRINCIPLES OF EXERCISE

Adherence to certain basic exercise principles is important for developing an effective program. The same principles of exercise apply to everyone at all levels of physical training, from the Olympic-caliber athlete to the weekend jogger.

These basic principles of exercise must be followed.

REGULARITY

To achieve a training effect, you must exercise often. You should exercise each of the first four fitness components at least three times a week. Infrequent exercise can do more harm than good. Regularity is also important in resting, sleeping, and following a sensible diet.

DEDICATED TO FITNESS LLC

A private, personal training facility; dedicated to health and fitness, that focuses on weight loss, toning up, cardiovascular training, nutritional guidance, and overall changes to improve your lifestyle for years to come.

TAKE ACTION

Ready to take the next step? Start by calling (510) 375-7227 or emailing to schedule your free consultation!

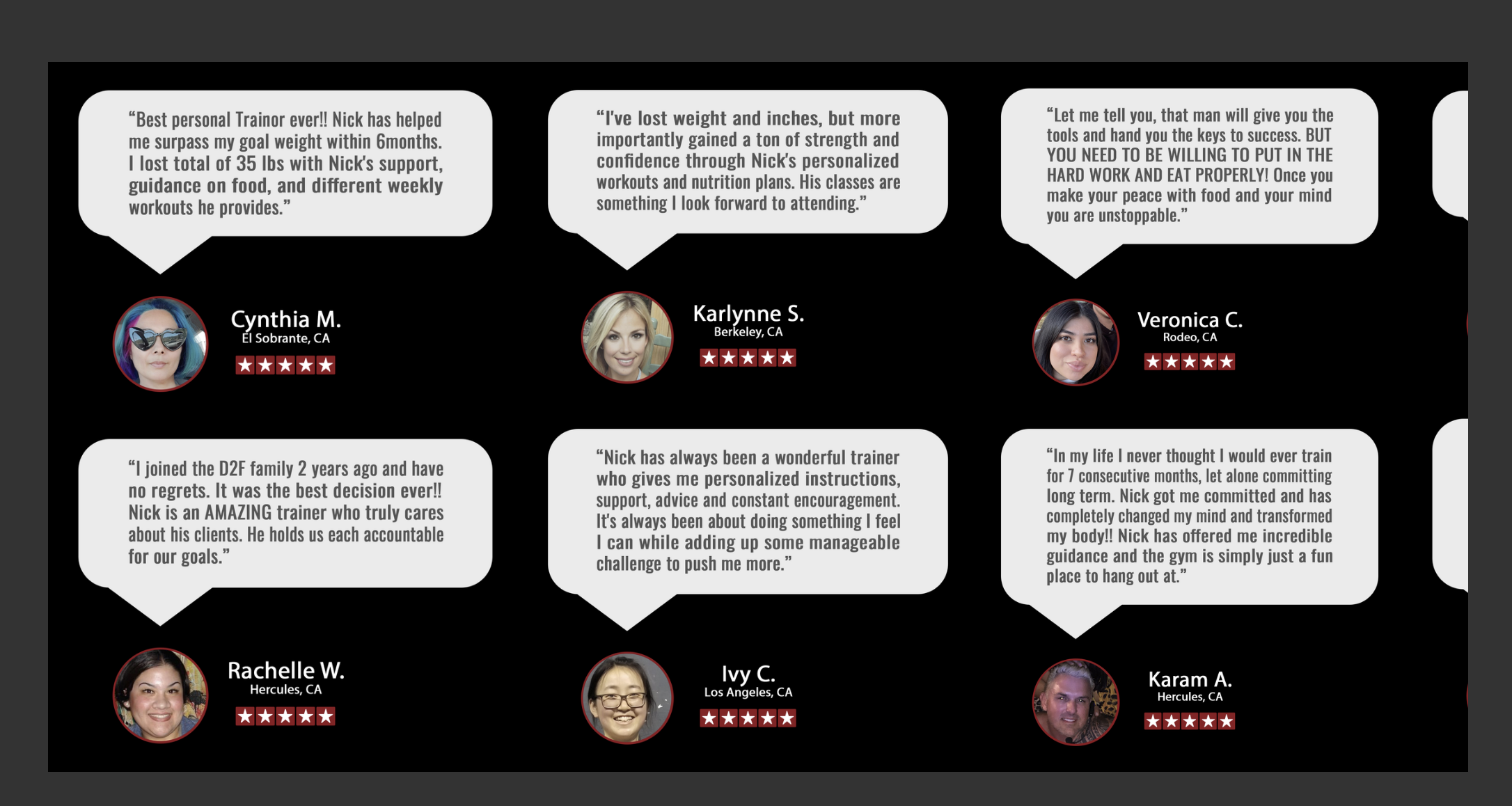

With his expertise in functional, strength, endurance, and core training, Nick has had the privilege of helping clients achieve even the most challenging fitness goals. He takes pride in his ability to employ innovative training methods and motivational techniques that lead to significant body transformations. His approach emphasizes functional movements that require minimal or no equipment, enabling clients to work out virtually anywhere without the need to wait for gym equipment.

Furthermore, Nick’s dedication extends beyond physical fitness; he’s committed to assisting individuals in breaking free from monotonous and sedentary lifestyles. He provides the tools and guidance needed to boost energy levels, enhance overall well-being, and implement nutrition programs that reduce, prevent, or eradicate medical crises. These programs also improve sleep patterns, reduce stress, and empower individuals to lead healthier lives. His genuine care and support also extend to helping people overcome negative thought patterns and limiting beliefs that may hinder their progress. Nick’s diverse client base ranges from inactive high school girls and single mothers/fathers to older retired individuals seeking to stay active and healthy.

Nick brings more to the table than just his passion for fitness. He holds a Bachelor of Fine Arts degree and boasts 14+ years of experience with a personal trainer certification from the National Academy of Sports Medicine. Additionally, he’s earned many specialty certifications, including one as a Fitness Nutrition Specialist, and is currently enrolled in yet another nutrition-focused certification program known as CNCs. He has also completed his MMA Conditioning Specialist and Integrated SA&Q Training certifications, along with a number of additional certifications focusing on the mental side of one’s health and fitness journey, including his Behavior Change Specialist and Mental Toughness certifications. To round it all out, he is also certified in Neuromuscular Stretching, Balance Training, Flexibility Training, Core Training, and Cardio for Fitness/Performance.

Nick’s commitment to ongoing learning and staying up-to-date with the latest in fitness education is unwavering. He also has plans to add CES (Corrective Exercise Specialist), PES (Performance Enhancement Specialist), WLS (Weight Loss Specialist), and WFS (Women’s Fitness Specialist) to his repertoire down the line.

In his personal life, Nick’s dedication to physical fitness and healthy eating is fueled by his passion for staying active. Over the years, he’s been involved in many competitive sports including baseball, soccer, gymnastics, volleyball, and football. When he’s not in the gym now, he’s an avid reader, a passionate gamer, an amateur furniture maker, a recent plant daddy, a geeky costume designer, and he thoroughly enjoys engaging in intellectual debates.

PROGRESSION

The intensity (how hard) and/or duration (how long) of exercise must gradually increase to improve the level of fitness.

BALANCE

To be effective, a program should include activities that address all the fitness components, since overemphasizing any one of them may hurt the others.

VARIETY

Providing a variety of activities reduces boredom and increases motivation and progress.

SPECIFICITY

Training must be geared toward specific goals. For example, people become better runners if their training emphasizes running. Although swimming is great exercise, it does not improve a 2-mile-run time as much as a running program does.

5 components of physical fitness

Physical fitness is the ability to function effectively throughout your workday, perform your usual other activities and still have enough energy left over to handle any extra stresses or emergencies which may arise.

RECOVERY

A hard day of training for a given component of fitness should be followed by an easier training day or a rest day for that component and/or muscle group(s) to permit recovery. Another way to allow recovery is to alternate the muscle groups exercised every other day, especially when training for strength and/or muscular endurance.

Improving the first three components of fitness listed above will have a positive impact on body composition and will result in less fat. Excessive body fat detracts from the other fitness components, reduces performance, detracts from appearance, and negatively affects your health.

Factors such as speed, agility, muscle power, eye-hand coordination, and eye-foot coordination are classified as components of “motor” fitness. These factors most affect your athletic ability. Appropriate training can improve these factors within the limits of your potential. A sensible weight loss and fitness program seeks to improve or maintain all the components of physical and motor fitness through sound, progressive, mission-specific physical training.